On AI and Robotics

Developing policy for the Fourth Industrial Revolution

In recent years a series of significant technological developments has made it possible for intelligent machines to carry out a greater depth and breadth of work than ever before. Artificial intelligence (AI) and robotic systems are disrupting a number of fields, although predictions differ significantly on the scale of the changes that will ultimately occur.

This publication is designed to help employers, regulators and policymakers understand the potential nature of these effects by reviewing a variety of application areas in which AI and robots are deployed, both individually and together. The more that is known about how different fields or industries might be disrupted, the better prepared institutions, companies and systems will be.

Researchers and associates of The University of Manchester, drawn from a range of specialisms, have contributed to this publication, which was coordinated by Policy@Manchester. Their insights cover four key areas: hazardous environments, healthcare, research, and industry (covering employment and future technical progress).

Although the challenges that companies and policymakers are facing with respect to AI and robotic systems are similar in many ways, these are two entirely separate technologies. And though they can be combined in advanced systems, stakeholders should be careful not to conflate them when developing policies for the future. The section below explains the similarities and differences between AI and robotics.

Artificial Intelligence (AI)

AI is the name given to a collection of programming and computing techniques that attempt to simulate, and in many cases exceed, aspects of human-level perception, learning and analysis. It is commonly considered a part of computer science and involves techniques that cover a range of different areas including:

- Visual perception – identification of specific objects or patterns from unprocessed image data, such as those used in facial recognition systems.

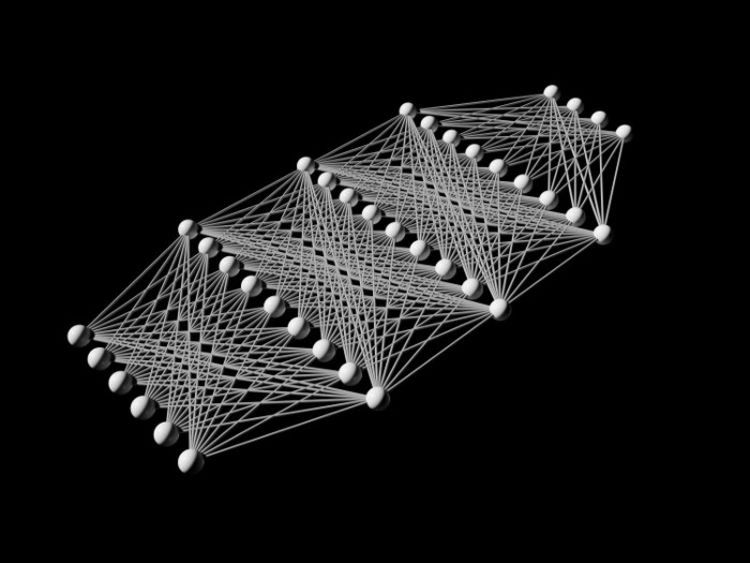

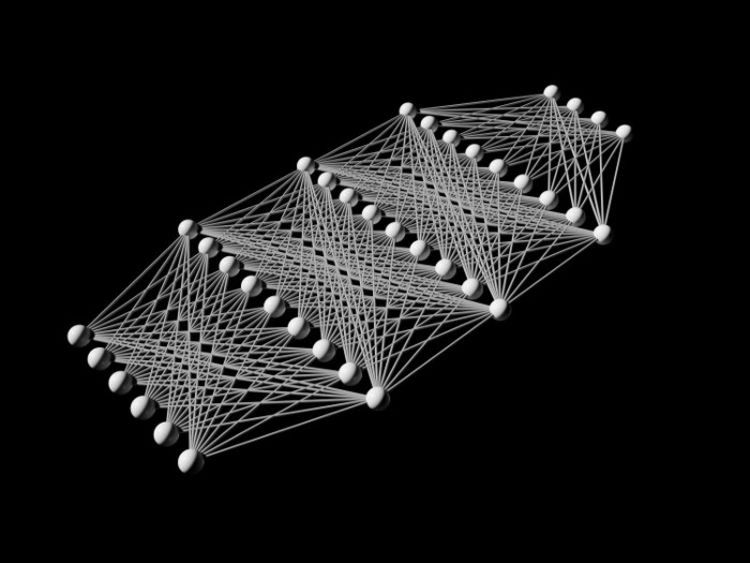

- Neural networks – models of brain function, with applications in learning and analysis.

- Speech and natural language processing (NLP) – discerning meaning from written or spoken language, as used in some translation applications, for example.

- Machine learning (ML) and deep learning – training computer systems to improve their performance on particular tasks by learning from examples. Learning systems are used in simple settings, such as recommendation algorithms that refine their results based on user behaviour (deployed by Google and Netflix, for example), as well as in more complex applications, such as game-playing systems like AlphaGo or systems built to play the computer game StarCraft II.

- Expert systems – tools that provide specialist information, in context, from existing databases and repositories for use by human operators. A common example is the use of decision-support systems in healthcare.
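To make the idea of "learning from examples" more concrete, the sketch below implements a perceptron, one of the simplest learning algorithms: it adjusts its internal weights each time it misclassifies a labelled example. It is an illustration only and is far simpler than the deep learning systems mentioned above; it is not how Google, Netflix or AlphaGo work. The viewing-hours data and the function names `train` and `predict` are hypothetical.

```python
# A minimal perceptron: weights are adjusted whenever the current rule
# gets a labelled example wrong, so the rule improves with training.

def predict(weights, bias, features):
    """Return 1 if the weighted sum of the features is positive, otherwise 0."""
    total = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if total > 0 else 0


def train(examples, epochs=10, rate=0.1):
    """Learn weights from (features, label) pairs using the perceptron rule."""
    n_features = len(examples[0][0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            error = label - predict(weights, bias, features)
            # error is 0 when the prediction is right, so weights only change on mistakes.
            weights = [w + rate * error * x for w, x in zip(weights, features)]
            bias += rate * error
    return weights, bias


# Hypothetical examples: (hours watched of genre A, hours of genre B) -> liked a new genre-A title?
examples = [
    ([5.0, 0.0], 1),
    ([4.0, 1.0], 1),
    ([0.0, 5.0], 0),
    ([1.0, 4.0], 0),
]

weights, bias = train(examples)
print(predict(weights, bias, [6.0, 0.5]))  # a new, mostly genre-A viewer
```

After training, the learned weights favour genre A, so a new viewer who mostly watches genre A is predicted to like the title. Larger systems rely on the same broad principle of adjusting a model in response to examples, but with very different models and far more data.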

Although these examples cover a diverse range of areas, state-of-the-art technology today is often referred to as weak or narrow AI as it is only suitable for small and specific tasks. A longer-term goal of some in the field is to create strong AI, also known as artificial general intelligence (AGI), which would have much wider applicability, and is the form of AI that appears most often in popular culture.

AI technologies are fundamentally computer programs and perform in the same manner whether using real data or running simulations. Unlike robots, they do not require interaction with the real world. However, while cutting-edge research today is nowhere close to developing AGI, there are many promising narrow applications that involve real-world interactions, including the ability to control aspects of robotic systems.

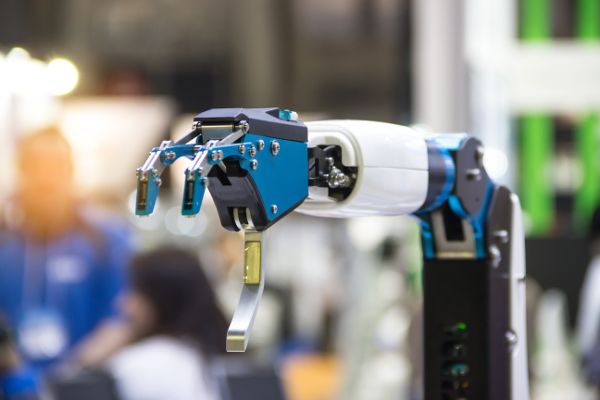

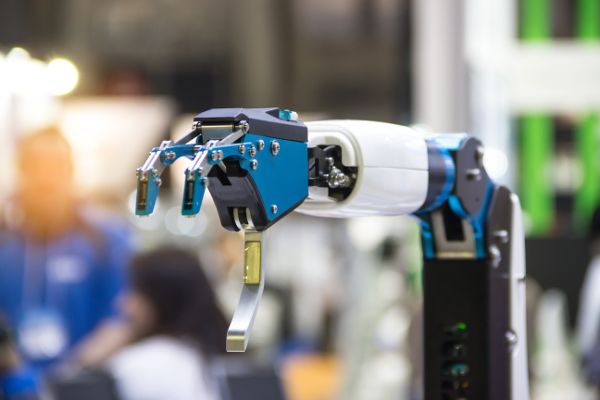

Robots

Robots are programmable machines that carry out physical processes and may be controlled by a human operator or an AI system (or, commonly, a combination of both). Robots are extensions to traditional machines with the ability to carry out potentially complex, multi-step processes, often incorporating sensing equipment to identify aspects of their environment.

Robotic systems are used in a wide range of applications such as:

- Carrying out repetitive industrial tasks such as moving materials, assembling parts, welding and painting, or loading other machines in factory environments.

- Collaborating with people in the workplace. Collaborative robots (known as cobots) operate alongside humans in a shared workspace to complete tasks together. Cobots often assist their ‘colleagues’ with moving and handling heavy loads.

- Performing useful basic tasks for consumers. Consumer robots operate autonomously to carry out simple tasks, such as vacuum or pool cleaning.

- Carrying out difficult procedures with human control and oversight. Professional service robots that can be human-operated, semi-autonomous or fully autonomous with human oversight are used in complex applications, such as in surgery.

- Intelligent robots and autonomous devices, sometimes referred to as artificially intelligent robots. Combining sophisticated environmental sensing capabilities with AI analysis and decision-making, such systems can perform complicated processes for extended periods without supervision. A prominent example in this category is an autonomous vehicle or self-driving car.
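The combination of sensing, decision-making and action described in the last item can be illustrated with a deliberately simple control loop. This is a hypothetical sketch and bears no resemblance in scale to a real autonomous vehicle: the functions `sense`, `decide` and `run`, the simulated obstacle and the distance thresholds are all assumptions chosen for illustration.

```python
# A toy sense -> decide -> act loop for a hypothetical robot moving along a corridor.
# The simulated sensor reports the distance to an obstacle; the decision rule
# chooses whether to advance at full speed, slow down or stop.
# All names and thresholds here are illustrative assumptions.

def sense(position, obstacle_at):
    """Simulated range sensor: distance from the robot to the obstacle."""
    return obstacle_at - position


def decide(distance, safe_gap=1.0):
    """Choose a step size based on the sensed distance."""
    if distance <= safe_gap:
        return 0.0  # too close: stop
    if distance <= 3.0:
        return 0.5  # getting close: slow down
    return 1.0      # clear path: full speed


def run(start=0.0, obstacle_at=6.0, max_steps=20):
    """Repeat sense -> decide -> act until the robot stops or max_steps is reached."""
    position = start
    for _ in range(max_steps):
        step = decide(sense(position, obstacle_at))
        if step == 0.0:
            break
        position += step  # act: move forward
    return position


print(run())  # final position of the robot
```

Run as written, the robot advances at full speed, slows as the sensed gap narrows, and stops one unit short of the obstacle. Real systems replace each hand-written rule with much richer sensing and, often, learned decision-making.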

Robotic technology is already shaping the workplaces of tomorrow. The global market for industrial robots has been estimated at over $40 billion in 2017, and is predicted to grow to over $70 billion by 2023 according to a recent report by Mordor Intelligence.
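As a rough check on the scale of this forecast, the two estimates imply a compound annual growth rate (CAGR) of just under 10%. The calculation below is a sketch that treats both figures as exact, although the report gives them as lower bounds ("over"), and counts 2017 to 2023 as six years of growth, with $V$ denoting market value in billions of US dollars:

```latex
\mathrm{CAGR} = \left(\frac{V_{2023}}{V_{2017}}\right)^{1/(2023-2017)} - 1
              = \left(\frac{70}{40}\right)^{1/6} - 1
              = 1.75^{1/6} - 1 \approx 0.098
```

In other words, a market growing by roughly 9.8% a year would rise from $40 billion to $70 billion over six years.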

Growth in the use of robotic systems and AI is likely to affect workers in different ways across many sectors, although the extent of the overall impact on employment is very difficult to predict. The next section summarises the current debate about the scale and nature of changes to employment.

The jobs debate

Popular descriptions of technological progress are often accompanied by predictions of the impacts on workers. Where AI and robotics are considered, the anxiety that this can cause is arguably heightened by the fact that the technologies are highly versatile and have the ability to affect multiple industry sectors. However, there is significant divergence in predictions of the scale of these effects, and many factors need to be taken into account when attempting to understand the impacts on jobs.

In the UK, for example, a recent report by the Centre for Cities (an independent think tank) predicts that jobs made up of routine tasks are the most exposed to automation, and that this will affect cities across the north and the Midlands to a greater extent than other parts of the country, potentially exacerbating regional divisions. The report states that, overall, one in five workers across the UK is in an occupation likely to shrink in the near future. However, it also predicts that all cities are likely to experience job growth by 2030, with approximately half of this being in publicly funded institutions.

In a different study, a recent Working Paper by the Organisation for Economic Co-operation and Development (OECD) dramatically scaled back previous predictions on employment disruption. The report expanded on an influential 2013 study by Carl Frey and Michael Osborne from the University of Oxford, which stated that 47% of jobs in the US were at a high risk of being automated. The new OECD Paper’s authors put this figure at just 10% which, while still representing millions of workers, is significantly lower.

The Paper explains that the change in the forecast was due to a new approach to analysing jobs. It states that the previous study exaggerated the effects of automation because it grouped jobs too broadly and did not take into account the different tasks performed by people in occupations with the same title but very different responsibilities. This further highlights the need to consider the nuances of the industry sectors and roles that may be at risk due to AI and/or automation.

And the individual technologies should also be considered separately when relevant. For example, the World Economic Forum’s Future of Jobs Report partially echoes the Centre for Cities study by portraying a generally pessimistic opinion of AI with respect to jobs, stating:

“Overall, our respondents seem to take a negative view regarding the upcoming employment impact of artificial intelligence, although not on a scale that would lead to widespread societal upheaval—at least up until the year 2020.”

However, the report paints a more optimistic picture of the job creation potential of technologies associated with the Fourth Industrial Revolution (Industry 4.0), including robotics. It states that Industry 4.0 technology could be a driver of employment growth across several sectors, particularly the "Architecture and Engineering job family."

Professor Tony Dundon and Professor Debra Howcroft at The University of Manchester argue that instead of job losses it is far more likely that workers in many areas will see the nature of their role change. Jobs may instead become more codified and reduced to certain core tasks through automation, which could lead to growth opportunities as well as widespread alterations in working practices and requirements.

Industrial sentiment will also drive the adoption of these new technologies. This has been assessed, for example, in a recent PwC survey, which found that:

“67% of CEOs think that AI and automation (including blockchain) will have a negative impact on stakeholder trust in their industry over the next five years.”

It is possible that such distrust could have knock-on effects on investment, earnings and jobs. However, it may also slow down the integration of new technologies that could potentially displace workers. Richard Waters, technology editor at the Financial Times, explains that we are in danger of overestimating AI, and that when systems fail to live up to the hype, future investment in the technology could be limited.

Robotic systems may also face similar barriers to industrial implementation. For example, Professors Dundon and Howcroft noted that of those companies in the US in a position to integrate automation, only 10% have so far done so.

Nevertheless, in spite of the barriers that may exist, AI and automation are expected to play a greater role in the workplaces of tomorrow, and many experts and studies agree that the nature of work is highly likely to change significantly for large numbers of employees as a result.

Professor Diane Coyle, former Director of Policy@Manchester, has previously written about the potential impact of AI and robots on employment, highlighting some of the differences in predictions discussed above. Professor Coyle urges policymakers to take a more detailed look at the issues they may need to contend with in the near future, to ensure that the effects of forthcoming changes on employees and societies are fairly distributed, explaining:

“For although the robot revolution, if that is what it turns out to be, might be good for humans in the long run – as past technologies have proven – it will also create winners and losers. There is a debate to be had about how to provide better for the losers next time around, and the time to have that discussion is right now, before the robots arrive, not after.”

The aim of this publication is to add to this debate and raise awareness of the scale and complexity of the issues society needs to face today. To provide background for such discussions, the next section gives an overview of international approaches to developing policies for AI and robotics.

The international policy context

The role of governments, policymakers and regulators in dealing with the changes that AI and automation will bring is multi-faceted. Existing laws, standards and regulations for these emerging technologies differ significantly across countries and territories, and approaches to supporting innovation while protecting workers and consumers will require the insights of experts from several fields.

The wide-ranging applications of the technologies demand comprehensive sets of policies that cover, amongst other things:

- Industrial strategies and productivity,

- Human safety, legal liability and risk,

- Data use, privacy and security, and

- Intellectual property development and protection.

Countries may have separate policy programmes for AI and robotics, or may combine them in large-scale industrial and research strategies, and existing laws will be challenged as new problems and opportunities occur.

To provide context in this area, this section gives an overview of some of the prominent international approaches to developing effective policies for AI and automation, with a closer look at initiatives in the UK.

Much of the material is adapted from the European Commission Digital Transformation Monitor's 2017 AI Policy Seminar: Towards an EU strategic plan for AI.

United States of America (USA)

The USA was the first country to develop a genuinely comprehensive AI strategy.

Launched in 2016, the National Artificial Intelligence Research and Development Strategic Plan encompassed AI, robotics and related technologies, and was designed as a high-level framework to direct effort and investment in research and development.

It focuses on seven key priorities:

- Make long-term investments in AI research,

- Develop effective methods for human-AI collaboration,

- Understand and address the ethical, legal, and societal implications of AI,

- Ensure the safety and security of AI systems,

- Develop shared public data sets and environments for AI training and testing,

- Measure and evaluate AI technologies through standards and benchmarks, and

- Better understand the national AI R&D workforce’s needs.

Germany

Industrial manufacturing plays a major role in the German economy, and this has been reflected in government strategies for exploiting AI and robotics.

In 2013 an initiative to boost participation and capabilities in Industry 4.0 applications, known as Plattform Industrie 4.0, was launched.

In 2017 the German government built on this with a strategy specifically for the autonomous vehicle sector, advancing the country’s position as a global leader in car manufacturing.

Five generic action areas have been defined:

- Infrastructure,

- Legislation,

- Innovation,

- Interconnectivity and cybersecurity, and

- Data protection.

The United Arab Emirates (UAE)

In 2017 the UAE Government launched the UAE Strategy for Artificial Intelligence.

This strategic plan is a pioneering initiative in the Middle East region and includes a major focus on public sector services.

The strategy aims to:

- Achieve the objectives of UAE Centennial 2071 (an ambitious project to make the UAE the best country in the world by 2071),

- Boost government performance at all levels,

- Use an integrated smart digital system that can overcome challenges and provide quick efficient solutions,

- Make the UAE the first in the field of AI investments in various sectors, and

- Create a new vital market with high economic value.

China

The Chinese economy has been boosted by manufacturing and exports for many years.

To build on this foundation, and to ensure that the country’s infrastructure and businesses modernise in order to compete in the future, the Made in China 2025 strategy was launched in 2015.

This is a comprehensive plan to upgrade Chinese industry, involving the development of new standards, capabilities and capacity for autonomous manufacturing processes.

Following this, in 2017 China released its New Generation AI Development Plan that detailed an approach to become the world leader in the field by 2030.

To achieve this aim, China has developed a three-part agenda covering:

- Tackling key problems in R&D,

- Pursuing a range of AI products and applications, and

- Cultivating an AI industry.

Japan

The Japanese government enacted the 5th Science and Technology Basic Plan in 2016.

This wide-ranging strategy covered aspects of innovation and internationalisation critical to the Japanese economy.

Part of the plan included approaches for developing a smart, technologically advanced and highly connected society known as Society 5.0, involving emerging innovation such as Internet of Things (IoT), AI and robotics.

This plan was followed in 2017 with an Industrialization Roadmap that detailed Japan’s approaches to developing and commercialising AI.

The Roadmap is made up of three organisational phases:

- Utilising and applying data driven AI developed in various domains (until 2020),

- Public use of AI and data developed across various domains (until 2025-2030), and

- Ecosystem built by connecting multiplying domains.

The European Union (EU)

The European Commission (EC) is leading a number of research, funding and regulatory programmes that aim to position the EU as a global leader in robotics and AI.

The EC has developed a public-private partnership for robotics in Europe known as SPARC.

SPARC has €700M in funding for 2014–2020 from the EC, and three times as much from European industry, making it the largest civilian-funded robotics innovation programme in the world.

In addition, the aforementioned 2017 AI Policy Seminar made four recommendations designed to enable an “ambitious European approach to supporting the development and use of AI”:

- Create a trusted regulatory framework,

- Train the EU workforce on AI,

- Attract private capital, and

- Enable SMEs to adopt AI by providing the necessary infrastructure.

United Kingdom (UK)

The UK Government has been developing a modern industrial strategy suited to a post-Brexit country, involving investments in robotics and other Industry 4.0 technologies.

In 2017 the UK also revised its existing Industrial Strategy in order to boost the AI sector and capitalise on its existing competitive advantages.

To grow the AI industry, four priorities were identified:

- Improve access to data by leading the world in the safe and ethical use of data and AI,

- Maximise AI research to make the UK a global centre for AI and data-driven innovation,

- Improve the supply of skills needed for jobs of the future, and

- Support the uptake of AI to boost productivity.

The UK is also developing a Centre for Data Ethics and Innovation to ensure safe and ethical R&D, and is providing new funding to help regulators bring innovation to market.

There are many more aspects of policy at national and regional levels that need to be considered in order to develop effective regulation for AI, robotics and their effects. In the UK, for example, regulation is not driven by industrial strategy alone, though industrial strategy plays an important role in introducing new technologies.

The role of policymakers and the state in supporting technological change was recently discussed by the Industrial Strategy Commission, an independent inquiry into the UK’s development of a long-term industrial strategy. The Industrial Strategy Commission was a joint initiative by Policy@Manchester and the Sheffield Political Economy Research Institute (SPERI) at the University of Sheffield. It explained that policymakers must balance realising productivity gains with distributing the benefits fairly:

“The new industrial strategy should recognise the state’s essential role in driving technological innovation, and focus on diffusion, as much as disruption. It will be important for it to be informed by rigorous and critical analysis, drawing on the expertise of National Academies, Research Councils, and the wider international academic and business communities. It should embrace technological change and seek to capture the benefits, but a critical perspective to occasionally overstated claims is always necessary.”

In addition, the Parliamentary Office of Science and Technology (POST) stated in its Topics of Interest 2018 briefing note that:

- The UK government predicts that robotics and autonomous systems (RAS) have the potential to increase UK productivity across the economy by up to £218 billion.

- The Boston Consulting Group predicts that robots will become cheaper and more efficient in the coming years, replacing human workers faster than expected while driving labour costs down by 16%. Automating tasks previously undertaken by human workers may lead to the loss of significant numbers of existing jobs while potentially creating new types of jobs with different skills requirements.

The briefing note also explains that POST considers the range of legal and ethical questions raised by the use of systems that are intelligent and/or operate autonomously to be very important regulatory challenges for the future. However, a number of bodies have carried out work on these issues already, and it is important that these studies are considered when formulating policy.

In 2017, for example, the UK Government commissioned an independent review on AI led by Professor Dame Wendy Hall and Jérôme Pesenti. The resulting report, entitled Growing the Artificial Intelligence Industry in the UK, included 18 recommendations covering:

- Access to data,

- Skills and capacity building,

- Improving research, and

- Supporting AI uptake.

The review built on previous work undertaken by a range of organisations in the field, such as the Royal Society’s report Machine learning: the power and promise of computers that learn by example. This report also stressed the need to educate tomorrow’s workforce so that the potential of AI in industry can be realised. In addition, the report discussed the importance of an effective data environment, with relevant open data standards and access issues considered in detail.

A number of the Royal Society’s recommendations were reflected in Hall and Pesenti's independent review, which was itself used by the UK Government to direct spending in the Autumn Budget 2017. Over £75 million is to be invested in education and research programmes, including new AI fellowships and PhD placements, and in the development of a Centre for Data Ethics and Innovation.

This body is intended to pioneer approaches to ensuring safety and ethical behaviour in data-driven technologies such as AI. It will work with a wide range of stakeholders from the public and private sectors and will sit alongside other newly conceived bodies, such as an AI Council and a Government Office of AI. However, it is not yet clear how the activities and responsibilities of these proposed organisations will overlap.

The technologies that such bodies will be tasked with overseeing are highly adaptable and have the potential to affect everyday life in many ways, some of them unforeseen, and many of these effects may need some form of regulation. The wide-ranging nature of AI, for example, was highlighted in an extensive report produced by the House of Lords Select Committee on Artificial Intelligence.

This 2018 consultation gathered evidence from a large number of stakeholders across many organisations, with the findings summarised in AI in the UK: ready, willing and able? The Committee advocates the development of a national policy framework for AI, calling for greater regulation in some areas. In the aforementioned Autumn Budget, the Government had already committed to establishing a new £10 million Regulators’ Pioneer Fund to assist regulators in bringing innovation to market.

In addition, in 2018 the UK Government launched an AI sector deal. This £1 billion strategy reflects the support of the private sector and addresses several areas of development needed to ensure the UK can become a highly competitive force in the industry.

AI and robotics are fast-moving technologies, which makes effective regulation a significant challenge for policymakers. The examples above cover some of the key areas of policy development in the UK, but there are also other global initiatives from the private and third sectors, such as:

- OpenAI – co-founded by Elon Musk and Sam Altman, among others, this non-profit AI research company intends to develop safe artificial general intelligence.

- The Global Initiative on Ethics of Autonomous and Intelligent Systems – created by the Institute of Electrical and Electronics Engineers (IEEE), this initiative is attempting to build broad consensus on the ethical issues that surround AI and robotic technology uses.

- The Malicious Use of Artificial Intelligence report – this 2018 report was produced by a number of authors from academia, industry and civil society, and made high level recommendations to policymakers in order to drive safer AI development.

How the proposed and existing policies, and the activity of new organisations, are actually implemented remains to be seen. Yet all stakeholders should be aware of the potential areas of operation that may be affected by new technologies, and what those changes might look like.

To assist in this, the rest of this publication provides examples of such impacts in a number of fields, beginning with applications that require technology able to withstand environments in which humans cannot operate effectively.

In hazardous environments

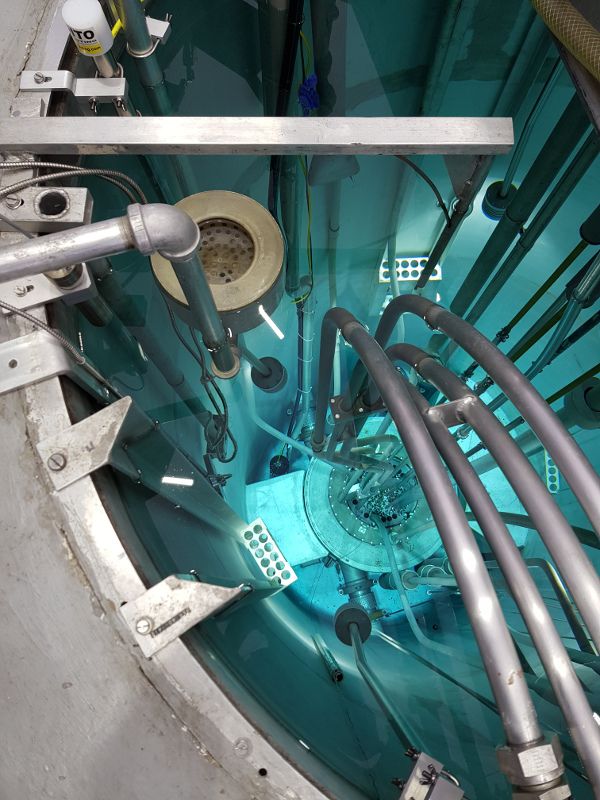

Environments that are too difficult or dangerous for people to work in are an important application area for AI and robotic technology. A successful example is the Robots and Artificial Intelligence for Nuclear (RAIN) project which involves several researchers from The University of Manchester based at the UoM Robotics Group. Funded by the EPSRC, the RAIN project is creating community hubs that bring together experts in robotics, AI and the nuclear industry in order to develop new tools that meet the demands of the sector.

Nuclear power facilities are heavily regulated environments with various forms of hazardous and radioactive material that make it very difficult, if not impossible, for human workers to carry out certain tasks. The UoM Robotics Group is working in partnership with Sellafield Ltd, one of the UK’s primary nuclear decommissioning sites, and its only fuel reprocessing facility, to test new solutions for mapping and monitoring parts of the site.

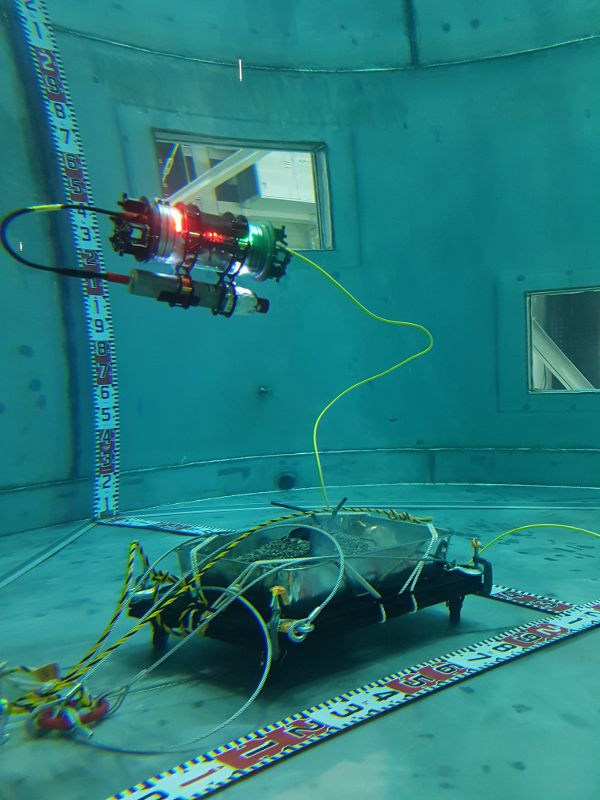

The Sellafield site was initially constructed as a Royal Ordnance Factory during World War Two, and is also adjacent to Calder Hall, the first nuclear power station in the world to generate commercial electricity at industrial scale. It now contains around 80% of the UK’s nuclear waste, much of it submerged in large underwater silos and ponds. This waste is highly radioactive and, in many areas, almost impossible to access or analyse without remotely operated vehicles such as AVEXIS, built by UoM Robotics.

This work has also been used to support decommissioning activities at the Fukushima Daiichi Nuclear Power Plant in Ōkuma, Japan, the site of a large nuclear incident following a tsunami in 2011. UoM Robotics researchers helped to build robotic vehicles fitted with neutron and gamma detectors, which have been deployed into a model of the flooded reactors at the Naraha research facility. The robots were able to safely locate and analyse simulated fuel debris, assisting international efforts to deal with the enormous ongoing challenge that Fukushima faces.

Hazardous environments are not only found underwater; they also occur in other parts of facilities that are too difficult to access, or where radiation levels are too high for humans. UoM Robotics has also developed vehicles to monitor these locations, including the MIRRAX – 150. This system can enter rooms through circular ports as small as six inches in diameter, then reconfigure its shape to travel at higher speed, even climbing stairs if needed. A range of monitoring equipment, such as HD cameras and radiation sensors, can be incorporated to map and analyse materials, enabling challenging locations to be examined safely.

A nuclear reactor in Slovenia used to test the AVEXIS system


AVEXIS searching for simulated fuel debris in Japan


Such innovative technology suitable for hazardous environments requires dedicated research and development to meet specific dangers and challenges. New intelligent robotic systems will help human operators carry out tasks that have never before been possible, keeping more people safer and enhancing productivity across industries. But there are barriers to commercial applications that need to be overcome.

Barry Lennox is Professor of Applied Control at The University of Manchester and part of the UoM Robotics group. His work developing intelligent robotic systems, such as those detailed above, alongside industry stakeholders has given him an in-depth perspective on the issues that companies and researchers will face when trying to incorporate new technology. As he explains:

"It is really important that regulators are aware of what robotic technology is and is not capable of doing today, as well as understanding what the technology might be capable of doing over the next 3-5 years."

"There is also a requirement to establish how new technology should be demonstrated to convince regulators and nuclear operating companies that it can be safely deployed into sensitive environments and provide benefits. Where established systems that clearly demonstrate the ability to solve specific industry challenges exist, policymakers and regulators should encourage the deployment of the technology into industry, particularly in situations where it removes people from harm."

Professor Lennox also explains that industrial actors have certain responsibilities in this process, and offers advice to help ease the introduction of innovative systems:

- "All levels within organisations (from senior management to the equipment operators) will need to be made aware of the current state-of-the-art in robotics technology, and how it might impact their industry in the short-term.

- Training should be provided at appropriate levels to ensure that the organisation is able to make the most of robotic systems when they are incorporated.

- Organisations should be encouraged to introduce ‘low consequence’ robotic systems (i.e. robots that aren’t going to cause problems even if they do malfunction). While such robotics may not provide significant cost savings initially, they will support the translation of the technology into the organisation. If staff see robots reliably performing routine tasks, such as remote inspection, then this will increase the confidence that the workforce has in the technology in other areas.

- The transfer of robotics technology into industry, and in particular the nuclear industry, requires cultural and societal changes as well as technological advances. One of the projects currently ongoing in the Dalton Nuclear Institute's nuclear and social science research network, the BEAM, is exploring social and cultural features of the nuclear industry and how they shape attitudes and the approach the nuclear industry takes to risk, change and the introduction of innovation."

Of course, greater industrial use will have an effect on employment and workplaces, as discussed throughout this piece. In Professor Lennox’s opinion these effects will probably take the following form:

"In the short-term, robotic systems are likely to have the biggest impact on activities which are repetitive and undertaken in structured environments (i.e. where you know where everything is and there is little or no uncertainty). In these situations the robotic system is likely to improve productivity (as it has done in the automotive industry for example), creating new roles and business opportunities. Such changes would have other subsequent impacts; in the nuclear decommissioning industry for example, the increased use of innovative robotic systems could lead to an acceleration of the rate at which facilities can be decommissioned, which will have knock-on effects to financial and employment plans."

"It is clear that the introduction of robotic systems can lead to a reduction in the risks that people are exposed to in certain industries, as has been shown in the nuclear industry. For example, alongside the remote vehicles already discussed, many workers in nuclear facilities perform operations with active materials in glove boxes. If robotic systems could be used to remove the worker from the hazardous material in these processes, then the risk of harm would be reduced, though the nature of the operator’s job would change (which may require re-training). Technology in this area is progressing very quickly and this application should be possible in the next 3-5 years."

Hazardous environments are very attractive application areas for new technologies due to the potential to make people safer. In a similar manner, healthcare and medicine are also significant areas of interest for the proponents of new technology.

In healthcare

Healthcare is a challenging domain for innovation for a wide variety of reasons. Market forces, regulation, highly controlled environments, social and cultural issues, and the scale of the risks involved all play a part.

Technology plays many roles in healthcare, from AI decision support systems to robot surgeons. For example, Accenture recently worked with Age UK on a pilot programme that investigated the use of AI to help older people manage their care and well-being. An AI-powered platform, incorporating voice recognition, was developed that enabled users and their families to access a range of useful data, from finding local events to checking whether medication was being taken on a daily basis.

While there are many isolated examples in this area, global health challenges require global responses, and the complexity and scale of the issues are often highlighted as motivation for implementing solutions involving AI. An important example of this is the international effort to limit the spread of antimicrobial resistance (AMR).

In recent years rapidly increasing resistance by bacteria to a range of previously successful treatments has become a prominent global issue, driven by a more mobile global population and a lack of new antibiotic development. The 2016 Review on Antimicrobial Resistance estimated that 700,000 people die annually due to drug-resistant infections, and that this could rise to 10 million a year by 2050. This is also predicted to result in a potential cumulative loss of $100 trillion in economic output.

The problem is considered to be at crisis level by the United Nations (UN), and in 2016 Dr. Margaret Chan, the former Director-General of the World Health Organization (WHO), said “We are running out of time.” Predictions of worst-case AMR scenarios involve large parts of traditional medicine being rendered useless. Routine, elective and preventive surgeries would become riskier than leaving conditions untreated, and in some cases doctors could be forced to tell patients that there is nothing more they can do for them.

Governments, charities and businesses are now looking to new technology to help address the issue. There are emerging applications for the use of AI and automation in the development of new drugs, an area that Professor Ross King at The University of Manchester has been working on for several years, as discussed further below. However, research and production of new antimicrobial treatments has not been a profitable area for drug companies, due to a variety of market factors. Recommendations to address this have been made in the 2016 Review on Antimicrobial Resistance, which discusses proposed market entry rewards and other financing requirements needed to make new development a reality.

While such antimicrobial supply-side improvements are pursued, technology can also play an important role in attempting to limit AMR with the treatments available today. In particular, rapid diagnostics and AI systems may be deployed in healthcare settings in two areas, clinical decision-making and AMR monitoring:

Clinical decision-making – rapid diagnostic tests enable doctors to identify the precise nature of an infection faster and more accurately, and hence treat it with a more relevant prescription. In addition, there are many emerging examples of AI being used in clinical decision support (from symbolic AI care guidelines to ‘cognitive systems’ such as IBM Watson) to help healthcare professionals make better choices on how to treat and care for a patient, and these can work with, or enable, faster diagnostics.

Alongside systems that proactively support prescribing and treatment choices for infection patients, AI may also be able to play a role in supporting clinical best practice through monitoring actions. Data on clinical interventions and their context can be used to provide an understanding of the behavioural factors that get in the way of best practices, and provide recommendations on how they can be reinforced or adapted.

AMR monitoring – if it is possible to monitor more of the factors that affect the spread of AMR in real time at a population level, more targeted large-scale interventions can be made. There are many factors to take into account, such as infection rates, antibiotic use in agriculture, microbe levels and movement observed in watercourses, prescription activity at a national and international level, and more. AI and big data solutions could be implemented to improve the tracking and analysis of such data in order to make meaningful recommendations. International cooperation will be required to fix surveillance blind spots, and new technology can help develop a system for monitoring drug resistance in near real time.

In order to support the development of these solutions policymakers should work with healthcare providers to provide access to the technical environments in which new technologies would operate. If developers can safely and securely utilise the tools, systems and architecture that health services and institutions currently use, then it will be easier to test and deploy novel technological solutions that help combat the spread of AMR. The approach can also be adapted for other health challenges.

Lord Jim O'Neill is an Honorary Professor of Economics at The University of Manchester and headed the Review on Antimicrobial Resistance. He explains:

“The AMR Review gave 27 specific recommendations covering 10 broad areas, which became known as the ‘10 Commandments’. All 10 are necessary, and none are sufficient on their own, but if there is one that I find myself increasingly believing is a permanent game-changer, it is state of the art diagnostics. We need a 'Google for doctors' to reduce the rate of over prescription.”

One of the major barriers to overcome in the development of national or international responses to global health challenges such as AMR is the lack of an effective data sharing infrastructure. Data access is often cited as a key variable in the efficacy of any AI system, and in healthcare there are multiple issues of data privacy, sensitivity, anonymity and liability that need to be overcome. Effective monitoring systems will require policymakers to reach consensus on how such data is gathered, shared and used.

Building such an architecture is both a technical and a cultural challenge. It will require effective data and metadata standards, as well as data labelling solutions to enable technical analysis by intelligent machines, where relevant. In addition, stakeholders at all levels must be happy to share their data, and it must be legally allowed.

As explained by Professor Niels Peek, Director of Greater Manchester Connected Health City and former President of the Society for Artificial Intelligence in Medicine (AIME):

“We need to develop infrastructures that simultaneously support healthcare practice and clinical research, creating an environment in which these two activities are perfectly aligned and form the basis of a rapid learning health system.”

The Connected Health Cities programme involves four city regions across the north of England who are working on projects that integrate care delivery with research in order to speed up innovation. As part of the programme Professor Peek’s team is working with NHS and Public Health England to better understand the drivers that lead to antibiotic prescribing in primary care settings.

This work involves the use of anonymous patient data collected from GP records, A&E departments and out-of-hours clinics, analysed in a secure environment. It is one of the many Connected Health Cities projects that involve sensitive information, and it demonstrates the care and thought that need to be put into exactly how such data is handled at every stage of the process.

A global response to AMR involves addressing this challenge at even larger scales, possibly involving AI systems with the ability to analyse, make inferences from, and deploy insights based on patient data. Efforts to develop the required data infrastructures would benefit from the lessons learned in projects such as those in the Connected Health Cities programme.

Dr Iain Brassington is a Senior Lecturer at the Centre for Social Ethics and Policy in The University of Manchester. He points out that issues faced in developing effective governance for a shared data infrastructure are substantial:

The people to whom the healthcare information refers may not be able to give full consent for their information being used in different ways, not least because possible uses for data may be unforeseen when consent is sought and given. One of the characteristics of big data is that it’s very good at spotting correlations nobody expected or that no human analyst could identify. There’s therefore a problem in respect of those insights, inasmuch as there may be things discovered about persons that those persons had no idea would or could be discovered, and in respect of who has access to the datasets.

This may be particularly worrisome when it comes to things like gaining health insurance, or in countries in which healthcare needs are associated with behaviour that is against the law. Each dataset alone may be straightforward, but when combined there might be all kinds of problems with identifiability. For example, information about the demand for treatment for alcohol misuse may, when combined with other datasets, make it possible to identify people with that particular health problem; and in a state with prohibitory laws, this may exacerbate patients’ vulnerability. This issue is made more acute by the possibility of data being shared between jurisdictions.
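The identifiability problem described above can be made concrete with a small linkage example. The sketch below is hypothetical: both datasets are entirely fictional, and the field names (`postcode_district`, `birth_year`, `sex`) are assumptions standing in for whatever attributes two real datasets happen to share. Neither dataset places a treatment next to a name, yet joining them on the shared fields ties a treatment to a named individual whenever that combination of attributes is unique.

```python
from collections import defaultdict

# Entirely fictional records, for illustration only.
# Dataset A: demand for treatment, with names removed but other attributes kept.
treatment_records = [
    {"postcode_district": "M13", "birth_year": 1980, "sex": "F", "treatment": "alcohol misuse"},
    {"postcode_district": "M13", "birth_year": 1975, "sex": "M", "treatment": "physiotherapy"},
    {"postcode_district": "M14", "birth_year": 1980, "sex": "F", "treatment": "physiotherapy"},
]

# Dataset B: a separate, seemingly harmless list that does contain names.
public_register = [
    {"name": "Person 1", "postcode_district": "M13", "birth_year": 1980, "sex": "F"},
    {"name": "Person 2", "postcode_district": "M13", "birth_year": 1975, "sex": "M"},
    {"name": "Person 3", "postcode_district": "M14", "birth_year": 1980, "sex": "F"},
    {"name": "Person 4", "postcode_district": "M14", "birth_year": 1980, "sex": "F"},
]

# Hypothetical attributes that happen to appear in both datasets.
SHARED_FIELDS = ("postcode_district", "birth_year", "sex")


def shared_key(record):
    """Combination of the attributes both datasets have in common."""
    return tuple(record[field] for field in SHARED_FIELDS)


def link(records, register):
    """Return the treatments that can be tied to exactly one named person."""
    names_by_key = defaultdict(list)
    for person in register:
        names_by_key[shared_key(person)].append(person["name"])
    reidentified = {}
    for record in records:
        candidates = names_by_key.get(shared_key(record), [])
        if len(candidates) == 1:  # a unique match makes the record identifiable
            reidentified[candidates[0]] = record["treatment"]
    return reidentified


print(link(treatment_records, public_register))
```

In this toy data two of the three treatment records are linked to a name; the third stays unlinked only because two people share its attributes, which is one reason data custodians generalise or suppress such attributes before data is shared.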

In addition, approaches taken in this important and challenging area, and in related fields, will have wide applicability in implementing other systems that can work across geographical boundaries and require large-scale coordination. Global cooperation is needed in many areas, such as travel and traffic systems, and international business and supply chains discussed below. Policymakers who engage with the ongoing developments in combating AMR have the opportunity not only to help address a serious and growing problem, but also to learn lessons that can be used in a variety of other areas.

Modelling human health

Alongside applications that involve using AI and robotic technologies to improve medical practices, the systems are also being deployed in research settings to improve our understanding of the human body. Models of human physiology and biological processes enable the acquisition of new insights into health, and the effectiveness of medical interventions.

For example, researchers in The University of Manchester’s Advanced Processor Technologies (APT) Research Group are assisting in the development of a new computer architecture inspired by the design and workings of the brain in the SpiNNaker project (an acronym for Spiking Neural Network architecture). They aim to build a massively parallel computing platform, a synthetic model of the human brain, from a million embedded Acorn RISC Machine processors (known as ARM chips).

The SpiNNaker architecture is made up of nodes communicating simple messages, known as spikes, that are inherently unreliable. This innovative approach is enabling researchers to discover powerful new principles of massively parallel computation.
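To illustrate in miniature what computing with simple, unreliable spikes can mean, the sketch below connects two leaky integrate-and-fire neurons over a link that randomly loses spikes. It is not SpiNNaker code: the neuron model, its parameters (`threshold`, `leak`, `weight`) and the `drop_probability` loss model are assumptions chosen for readability, whereas real SpiNNaker simulations involve very large numbers of neurons running in parallel.

```python
import random


class LIFNeuron:
    """Leaky integrate-and-fire neuron, a common simplified spiking-neuron model."""

    def __init__(self, threshold: float = 1.0, leak: float = 0.9):
        self.potential = 0.0
        self.threshold = threshold
        self.leak = leak  # fraction of the membrane potential kept each time step

    def step(self, input_current: float) -> bool:
        """Integrate one time step of input; return True if the neuron spikes."""
        self.potential = self.potential * self.leak + input_current
        if self.potential >= self.threshold:
            self.potential = 0.0  # reset after firing
            return True
        return False


def simulate(steps: int, weight: float, drop_probability: float, seed: int = 0):
    """Drive a source neuron with constant input and send its spikes to a
    target neuron over a link that loses each spike with drop_probability.

    Returns (spikes delivered to the target, spikes fired by the target).
    """
    rng = random.Random(seed)
    source, target = LIFNeuron(), LIFNeuron()
    delivered = target_spikes = 0
    for _ in range(steps):
        spiked = source.step(0.5)
        # A spike carries nothing beyond "I fired", and it may be lost in transit.
        arrived = spiked and rng.random() >= drop_probability
        if arrived:
            delivered += 1
        if target.step(weight if arrived else 0.0):
            target_spikes += 1
    return delivered, target_spikes


print(simulate(steps=30, weight=0.6, drop_probability=0.0))  # (10, 5)
print(simulate(steps=30, weight=0.6, drop_probability=0.3))
```

With no loss, the target fires once for every two spikes it receives; raising `drop_probability` lowers the target's firing rate rather than halting the simulation, a small-scale analogue of a system designed to keep working when individual messages are lost.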

The finalised model will be able to demonstrate around 1% of the capacity of a human brain (roughly the same computing power as the brains of ten mice) and is built differently from traditional supercomputers because of the biological basis of its design. There are many challenges to overcome when modelling complex structures that occur in the human body, particularly the brain.

It is this very structural complexity that will allow the SpiNNaker project to imitate aspects of brain function. Simulated ‘drugs’ can then be applied to the model so that their effects may be studied, resulting in a model that is both based on and contributes to modern neuroscience.

One of the principal researchers on the project is Professor Steve Furber, co-designer of the ARM chip and ICL Professor of Computer Engineering at The University of Manchester. He explains the importance of initiatives such as the SpiNNaker project:

"Medicine is already a very active area for computer modelling research, from designer drugs (where modelling is at molecular level) through to aphasia rehabilitation (using computer models to determine which therapy is most likely to be effective for a given patient)."

"Medicine has moved a long way from empirical experiments with random drug-candidate chemicals towards disease understanding, in which computer models play a role, leading to drug specification and design. Of course, there is still a lot of empirical testing required, with unexpected outcomes such as Viagra, which was originally designed for smooth-muscle treatments. It was rather ineffective for the intended use, but then some interesting side-effects were reported…"

The enormous hardware and software challenges faced by the SpiNNaker team also show the difficulty involved in creating truly strong AI. Professor Furber explains that although our brains run on approximately the same power as a small lightbulb, current technology is nowhere near as efficient for building artificial alternatives:

“For a full-scale computer model of the human brain, we’d be looking at a machine that would need to be housed in an aircraft hangar and consume tens of megawatts,” he says. “It would need a small power station just to keep it going.”

As a discipline healthcare delivery is closely aligned with research, and AI and robotics are finding several applications in this area. In addition, the technologies are also being used to improve aspects of the research process itself.

In research

While innovative new technologies are brought to market as the result of research, they can also help improve the acquisition of knowledge itself. Robotic systems are automating routine processes, sometimes at scale, freeing up researchers to be more productive and follow new creative insights. At the same time, emerging AI capabilities are helping researchers make sense of vast amounts of data and apply new learning techniques to existing problems.

The potential applications go beyond simply monitoring data streams and recognising patterns hidden to the average human user. Intelligent systems are now able to predict and react to changes in the data, determine new avenues of enquiry and develop experiments to pursue ideas.

A prominent example of AI and automation in research is the work of Professor Ross King from the Manchester Institute of Biotechnology at The University of Manchester. His team have developed a ‘robot scientist’ called Eve, pictured in this section.

Eve carries out complex processes in biotechnology autonomously, running for many hours at a time without human supervision. This is made possible by recent advances in robotics, AI and access to large-scale datasets.

The system features two robotic arms and a series of tracks and lifts that can transport and manipulate standard sized well plates containing biological samples. A number of different sample preparation and analysis instruments are integrated, and an intelligent software system manages the entire setup, making logical predictions akin to human hypotheses, testing their veracity, analysing the results and adjusting the approach.
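The cycle described above (predict, test, analyse, adjust) can be sketched as a toy closed loop. This is not Eve's software: the compound library, the chemical classes, the `run_assay` stand-in and the greedy "test the most promising class next" rule are all hypothetical, chosen only to show how each result feeds back into the choice of the next experiment.

```python
# Hypothetical compound library: name -> (chemical class, hidden "true" assay outcome).
LIBRARY = {
    "cmpd-01": ("class_A", False),
    "cmpd-02": ("class_A", False),
    "cmpd-03": ("class_A", True),
    "cmpd-04": ("class_B", True),
    "cmpd-05": ("class_B", True),
    "cmpd-06": ("class_B", False),
    "cmpd-07": ("class_C", False),
    "cmpd-08": ("class_C", False),
}


def run_assay(compound: str) -> bool:
    """Stand-in for a robotic measurement of whether a compound is active."""
    return LIBRARY[compound][1]


def closed_loop(budget: int):
    """Repeatedly pick the most promising hypothesis, test it and update beliefs.

    Returns (class with the highest estimated hit rate, log of (compound, result)).
    """
    # Belief per class as [hits, tests], seeded with a neutral prior of 1 hit in 2 tests.
    beliefs = {cls: [1, 2] for cls, _ in LIBRARY.values()}
    untested = {cls: [c for c, (k, _) in LIBRARY.items() if k == cls] for cls in beliefs}

    def hit_rate(cls: str) -> float:
        hits, tests = beliefs[cls]
        return hits / tests

    log = []
    for _ in range(budget):
        candidates = [cls for cls in beliefs if untested[cls]]
        if not candidates:
            break  # every compound has been tested
        cls = max(candidates, key=hit_rate)  # hypothesis: this class is most likely active
        compound = untested[cls].pop(0)      # choose the next experiment
        active = run_assay(compound)         # run it
        beliefs[cls][0] += int(active)       # analyse the result...
        beliefs[cls][1] += 1                 # ...and adjust the belief
        log.append((compound, active))
    return max(beliefs, key=hit_rate), log


best, log = closed_loop(budget=4)
print(best)  # class_B
print(log)
```

With a budget of four experiments the loop drops `class_A` after a single failure and concentrates on `class_B`. A purely greedy rule like this can fixate on an early favourite; active-learning methods often add deliberate exploration, which is omitted here for brevity.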

In a recent project, for example, Eve discovered that triclosan, a compound commonly used in toothpaste, can be effective against malaria. This work, led by researchers at the University of Cambridge, identified that triclosan can inhibit the growth of malaria-causing parasites, possibly preventing the disease from spreading in the body.

Eve is able to process vast amounts of data far quicker than a human operator, and extract useful information from it more easily. The triclosan experiment demonstrates the system’s ability to carry out high throughput analysis based on these data, making multiple measurements autonomously, and through the night, in order to refine the approach.

As Professor King explains:

“Artificial intelligence and machine learning enables us to create automated scientists that do not just take a ‘brute force’ approach, but rather take an intelligent approach to science.”

The forerunner to Eve was the robot scientist called Adam, developed at Aberystwyth University, which is regarded as the first machine to independently develop new scientific knowledge. Professor King’s work now also involves considering how such new knowledge can be owned and used, beyond research.

One of the major issues debated in this area is how it may be possible to legally protect knowledge developed by a machine. Patent law in most countries usually refers to discoveries by humans, and certain adjustments may be needed to account for the knowledge developed by machine – though this is yet to be tested to any great extent in court.

This is a challenge not just in research, but also in the business operations of the future. As a recent PwC report states:

“At the heart of the opportunity for businesses is the ability to turn data into intellectual property (IP) – more than 70% of business leaders in our survey believe that AI will be the business advantage of the future.”

Although AI is likely to bring significant new capabilities to businesses, companies will need to understand how to protect the value that newly developed knowledge will bring. In regulatory terms, the learning capabilities of AI systems are also a challenge: there is a fundamental problem in regulating any deployed system that continues to learn, as its future behaviour may be hard, if not impossible, to predict.

Policymakers and regulators will need to be careful to develop oversight rules that balance ensuring the benefits of a system’s learning abilities are exploited (i.e. that there is flexibility for it to learn, test and deploy new strategies), with protecting against the negative effects of future unpredictable behaviour as a result of this learning (i.e. it should be possible to limit the system’s flexibility to implement what it has learned when necessary).

In order to facilitate this, policymakers should proactively engage with experts and try to understand as much as possible about how such technologies will work in order to create better regulation. And these challenges will soon be faced by regulators across many sectors; not just in research, but also in industry, as intelligent systems continue to revolutionise working environments.

In industry

Industrial environments are constantly changing as new business priorities and innovation opportunities arise. The current period is often called the fourth industrial revolution or Industry 4.0, referring to the potentially disruptive effects of several emerging and maturing technologies.

Chief among such technologies are AI and robotic automation, which have the potential to revolutionise many aspects of industrial environments whether deployed separately or together.

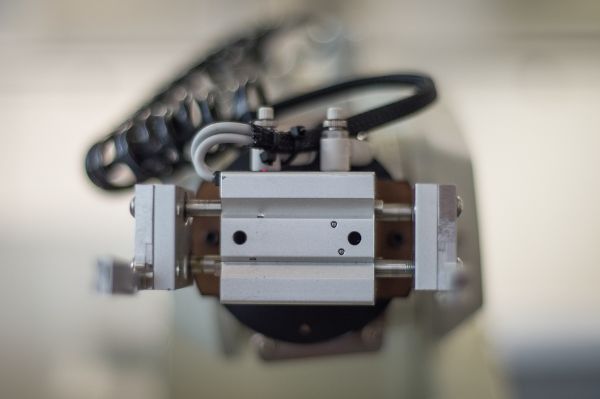

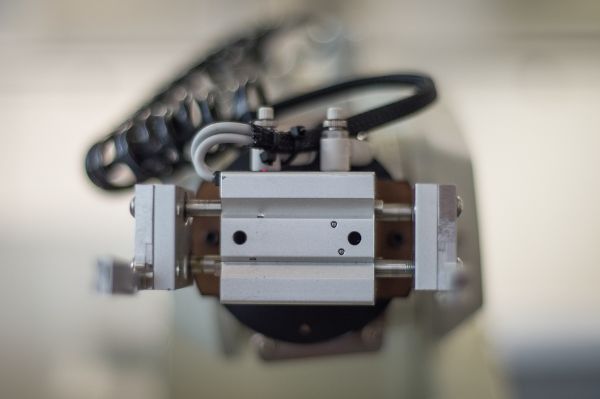

Dr Carl Diver is a Lecturer in the School of Mechanical, Aerospace and Civil Engineering at The University of Manchester, and studies various aspects of Industry 4.0. In a series of videos he gives examples of how AI and robotic systems are being used in industry, and highlights several themes:

- Collaborative robots (or cobots) are of particular interest to many companies at present.

- Incorporating such new technologies can be particularly difficult for smaller businesses.

- There is a need to develop training and education programmes for workers at all levels.

- Engagement with all relevant stakeholders is also important.

To look more closely at these issues, this section contains two contributions from researchers in different fields. The first discusses the use of robotic systems in agriculture, and the second covers AI in the workplace and beyond.

The agri-food industry

The global food chain is under a variety of pressures due to climate change and the impacts of population growth, movement and ageing. A secure food chain is vitally important for the world, and it is also a sector with enormous economic potential. Agri-food is the largest manufacturing sector in the UK for example, and it supports a food chain that generates over £108 billion a year and employs 3.9 million people.

In order to deal with the issues the industry faces, leaders are increasingly turning to technology, and in particular robotics and autonomous systems (RAS). At The University of Manchester a team of researchers has been assessing the opportunities and barriers for RAS as part of the N8 AgriFood project.

Professor Bruce Grieve is the N8 Chair in Agri Sensors & Electronics and Director of the e-Agri Sensors Centre at the university. He has highlighted several potential applications for RAS in agri-food:

- The development of field robots that can assist workers by carrying weights and conduct agricultural operations such as crop and animal sensing, weeding and drilling,

- The integration of autonomous system technologies into existing farm operational equipment such as tractors,

- Robotic systems to harvest crops and conduct complex dextrous operations, and

- The use of collaborative and “human in the loop” robotic applications to augment worker productivity, and of advanced robotic applications, including soft robotics, to drive productivity beyond the farm gate into the factory and retail environment.

Professor Grieve explains that researchers envision:

... a new generation of smart, flexible, robust, compliant, interconnected robotic systems working seamlessly alongside their human co-workers in farms and food factories. Teams of multi-modal, interoperable robotic systems will self-organise and coordinate their activities with the “human in the loop”. Electric farm and factory robots with interchangeable tools, including low-tillage solutions, novel soft robotic grasping technologies and sensors, will support the sustainable intensification of agriculture, drive manufacturing productivity and underpin future food security.

In order to realise the opportunities that RAS can bring to the sector, the N8 AgriFood team have developed a number of recommendations for policymakers, funding agencies, industry stakeholders, and research centres. Professor Grieve explains that these are:

- UKRI (including Research England) funding is required to train and expand human expertise in Agri-Food RAS. This may include Centres for Doctoral Training (CDTs) but should also make provision for lower-level skills development, through to apprentice level.

- The community needs to be defragmented. We recommend investment in Network+ grants to stimulate and condense the community alongside the establishment of larger scale Agri-Food RAS hubs. We recommend these hubs are virtual and multi-centred, working tightly with farmers, companies, and satellite universities to create the infrastructure to catalyse RAS technology. We do not believe any single UK centre currently provides the scope and capacity of expertise to deal with all the fundamental RAS challenges facing the sector. Given the global impact of Agri-Food RAS, we also recommend the UK secures international collaboration to accelerate RAS technology development.

- We recommend any new centres and networks must comprise academic and industry agri-food domain expertise (crop and animal scientists, farmers, agricultural engineers) to ensure RAS solutions are compatible with industry needs. We believe many solutions to agri-food challenges will come from integrating RAS with more traditional technologies (agricultural engineering, crop and animal sciences etc).

- The UK Research Councils, such as the EPSRC, STFC, ESRC, BBSRC, NERC & MRC would benefit significantly from a coordinated Agri-Food research foresight review that integrates RAS technologies. As it stands, EPSRC will not fund Agri-Tech, but BBSRC will only fund Agri-Tech projects in which the bulk of the work is biology-related. If we are going to develop valuable new agri-robotic technologies, there needs to be a way to fund research where the application is agriculture, but the research itself is pure engineering / robotics. A foresight review would recognise the complex cross-disciplinary challenges of Agri-Food per se, but also how RAS can be integrated into a wider program.

- To deliver impact, Agri-Food RAS needs to integrate multiple technologies and resolve significant interoperability issues. We recommend that UKRI commissions a small number of large scale integration or “moon shot” projects to demonstrate routes to resolve these issues and deliver large scale impact.

- With the new changes, there is great potential for cooperation with China, India and other countries where the so-called Global Research Engineering Challenges still need immediate solutions. The UK has an instrumental role in this process, and at the same time these new collaborations have the potential to open new avenues.

- The ongoing and large-scale government investment behind high TRL (e.g. Innovate UK led) research addressing agri-food sector needs is impressive. However, these investments will not succeed without investment in large-scale lower TRL research.

Ensuring a sustainable, safe and high-quality food supply is an important challenge for an array of stakeholders. Incorporating new technologies successfully will help to meet growing demand and alleviate the increasing pressures the sector currently faces. But there will be risks associated with such innovation, as discussed in the next essay.

AI and robotics – promises and risk management

By Professor Vasco Castela

Sooner or later we will build machines with human-level intelligence. There is no compelling reason to believe that this is impossible; that we cannot create a machine that both behaves intelligently, and even has a consciousness, along with the emotions that come with it. After all, we already know that machines can think and feel, for humans clearly are (biological) machines, as 18th century physician and philosopher La Mettrie pointed out. It would be strange indeed if it turned out that intelligence and consciousness can arise by natural selection alone, but cannot ever be built by human beings, who can harness the power of both design and natural selection, with the help of computer models that implement evolutionary processes.

Human-level AI will likely exist, but not this year, and perhaps not this century. Since the 1950s, there have always been experts claiming this is just around the corner, but it is likely that we have really just started, and that there is much to be done. A common metaphor used to describe the situation is that we’re making good progress climbing a tree on our way to the moon – meaning both that we’re still very far from our goal, and that although we are advancing, there is no guarantee that we can keep going along our current path, and may have to try something altogether different.

New approaches to AI and robotics are paying off

Even though we are still very far from achieving human-level AI, that doesn’t mean the field hasn’t made much progress. Interestingly, much of the progress achieved in robotics in the last few decades is connected to becoming more humble in our aims, with many researchers abandoning the goal of directly aiming for human-level AI. This has happened in two ways. First, instead of aiming to build a mind, which involves making massive assumptions about how we think, we are now trying to build a learning brain. We have been moving from strictly symbolic computer programming, where we directly and explicitly issue orders to the machine (in effect simulating conscious reasoning), to the increased use of artificial neural networks, where the machine does a lot of its own learning, and the programmer does not even fully understand why it does what it does. Secondly, we have moved to aiming for insect or animal-level cognitive skills, both as an end in itself and as a basis for higher cognition.

The idea that we should start with a foundation of basic bodily cognitive skills such as navigation and obstacle avoidance (of the kind we find in insects and animals) before we can consider aiming for human-level general problem solving was outlined in a 1990 paper by MIT’s Rodney Brooks entitled Elephants Can’t Play Chess. Rodney Brooks later co-founded robotics company iRobot, where he developed, among other products, the autonomous vacuum cleaner Roomba. The navigational skills and speed of these relatively common household robots may seem ordinary now, but their performance was unthinkable in the early 1980s, when autonomous robots took hours to achieve basic tasks.

Crucially, the burst of progress in robotics that started in the 90s had little to do with Moore’s law of ever faster computing speeds, and it was more connected with the fact that this new approach to robotics involved the building of mechanical insects that didn’t bother with understanding or mapping the world, but merely responded to it as appropriate. It turned out that a clever artificial insect could accomplish much more than an extremely crude artificial human.
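The contrast between mapping the world and simply responding to it can be sketched as a priority-ordered set of reactive behaviours, loosely in the spirit of Brooks's behaviour-based robotics. The robot below is hypothetical, as are its behaviours, thresholds and wheel-speed values; it is not how any commercial product works, and Brooks's subsumption architecture is richer than this fixed-priority arbiter. The point it shows is that each command is computed directly from current sensor readings, with no stored map or world model.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

# A motor command is (left_wheel_speed, right_wheel_speed) in arbitrary units.
Command = Tuple[float, float]


@dataclass
class Sensors:
    """Current raw readings only: the robot keeps no map or model of the world."""
    bump: bool
    obstacle_distance_m: float
    dirt_detected: bool


def escape(s: Sensors) -> Optional[Command]:
    """After a collision, reverse while turning."""
    return (-0.2, -0.1) if s.bump else None


def avoid(s: Sensors) -> Optional[Command]:
    """Turn on the spot when something is close ahead."""
    return (0.1, -0.1) if s.obstacle_distance_m < 0.3 else None


def spot_clean(s: Sensors) -> Optional[Command]:
    """Slow down over dirt."""
    return (0.05, 0.05) if s.dirt_detected else None


def wander(s: Sensors) -> Optional[Command]:
    """Default behaviour: drive straight ahead."""
    return (0.3, 0.3)


# Ordered from highest to lowest priority: the first behaviour that
# responds overrides every behaviour below it.
BEHAVIOURS: List[Callable[[Sensors], Optional[Command]]] = [escape, avoid, spot_clean, wander]


def control_step(s: Sensors) -> Command:
    """Map the current sensor readings straight to a motor command."""
    for behaviour in BEHAVIOURS:
        command = behaviour(s)
        if command is not None:
            return command
    raise RuntimeError("no behaviour produced a command")


print(control_step(Sensors(bump=False, obstacle_distance_m=0.2, dirt_detected=True)))  # (0.1, -0.1)
```

Here obstacle avoidance wins over spot cleaning simply because it sits higher in the list; adding a new competence means adding a behaviour rather than extending a world model.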

Recent advances by companies such as Boston Dynamics (e.g. the Spot Mini and Atlas models) demonstrate the next steps in these advanced physical capabilities, and although they cannot chat or solve any formal problems, their uncanny cognitive sophistication allows them to successfully navigate the real and unpredictable physical world, while older robots could only get around in synthetic environments, known as “toy worlds”, which were specifically designed for them, with high contrast lighting, and with shapes that have sharp edges and bright colours.

It’s not just in robotics that progress is accelerating. Neural networks can detect patterns (something traditional computers are very bad at), and they can do it better, and of course much faster, than human beings. We have been connecting them to large databases, which they can mine in order to find correlations that were previously unseen and invisible to us. Feed them information on our buying habits, and they can predict better than a psychologist, and better than ourselves, what we are likely to buy next, either as a group or even as individuals. The same is true of other patterns of human behaviour. Neural networks can be connected to CCTV cameras, and now easily outperform human beings in facial recognition, not only in speed but also in accuracy, being able to spot an individual in a crowd of thousands in a split second. Before neural networks, it was possible to track someone 24/7, if you really wanted to, but it took large resources and a massive team of human specialists. Soon the technology will be in place to make it possible to track everyone, all of the time.

Developments in AI are starting to have a powerful disruptive effect on how we live now and will live in the short to medium term. There is good and bad here. On the one hand, we will see vastly increased economic efficiency leading to greater overall wealth, in what will likely be a new industrial revolution. AI will take on jobs that are dirty, dangerous or dull, and thus save lives and prevent human suffering. Some jobs will inevitably disappear, but history has so far shown that new technologies have always ended up creating more jobs overall than they eliminate.

On the other hand, wars may become politically more attractive than they are now, as the loss of autonomous drones will be easier to justify than the loss of soldiers’ lives; we are already seeing this with current remote-controlled drones. This could lead to more frequent and more widespread military action around the world by any country with the resources to deploy these machines. As AI systems replace not just physical but increasingly also cognitively complex human labour, the rate of job loss is likely, for a while, to outpace the rate at which the job market can adjust by creating new and different occupations. In addition, increasingly powerful data-mining capabilities will start to have a very worrying impact on privacy.

The deeper concerns

Privacy issues get plenty of coverage these days, but the rise of autonomous weapons and the possible consequences of short- and medium-term job loss do not seem to be getting sufficient attention. Autonomous weapons are already in military use, even though they are not currently allowed to make kill decisions. Initially the focus was almost exclusively on the straightforward replacement of a person with a machine within conventional designs, such as the fighter jet or the tank. The UK’s Taranis and the US’s X-47B are good examples of autonomous planes. The X-47B has already shown it is fully capable of taking off from and landing on an aircraft carrier autonomously, and with more precision than a human pilot ever could. The game changer, however, may be the recent trend towards developing swarms of hundreds of cheap, expendable drones that work together in coordinated groups, performing detailed surveillance tasks and overwhelming defence systems. Adding kill decision-making capabilities to current drones could be trivial from a technical point of view, depending on how accurate we want their decisions to be. This technology cannot be allowed to remain unregulated, and there are good arguments for a complete ban.

The video Slaughterbots, part of a campaign against lethal autonomous robots, shows why this is a pressing issue. Shot in the form of a sci-fi dystopia, it shows the very real power of these machines when governed by AI. Most importantly, although the particular drones in the film don’t exist just yet, that is only because no one has chosen to build them. The technology to make them autonomous is cheap and easy to obtain right now, whether by a rogue state, a terrorist group or possibly a single individual. The film shows swarms of drones identifying and killing people autonomously, selected from a database of political targets, without the orchestrator of the attack being traceable.

In addition to these concerns, there is anxiety about ensuring that the benefits of these new technologies are fairly distributed. The increased wealth that can result from higher productivity will not benefit everyone, so inequality may rise unless policymakers are prepared to take action. The issues that Karl Marx worried about (the risks arising from capitalists owning the means of production) will be amplified, as it can be argued that capitalists will now also own the mechanised labour. However, worst-case scenarios always get more attention in the press, so we need to be careful not to panic unnecessarily; it is too early to say whether there will be a permanent rise in unemployment.

Preparing for opportunities and impacts

New technologies have been eliminating some jobs (and creating more than are lost) for a long time, so in a sense this is not a new phenomenon. It could be argued, however, that AI is different. Because it is more flexible than other technologies, it will hit more industries and displace jobs faster than new jobs can be created or workers retrained. Even so, it is more likely that parts of each job will be done by an AI than that entire jobs will disappear. There will be some notable exceptions, such as driving jobs, which could be wiped out within the next twenty years or so.

Policymakers must start to seriously consider what solutions can be developed in order to deal with the temporary job losses that do seem inevitable. Some possible approaches that have been discussed are:

- Taxing robots for their labour (i.e. as they replace human labour) – This seems to be a popular idea in some circles, although governments have never taxed other productivity tools (whether hammers, laptops or more efficient marketing strategies) for the labour they displace. In the competitive environment of the global economy, it seems unlikely that any government would choose to handicap its economy like this. What would such a tax achieve, other than increasing corporate tax and calling it something else?

- Increasing corporate tax everywhere – This is a possible solution if there is sufficient political will globally. To avoid the loss of competitiveness that would follow if a single state acted alone, it could only be carried out with global coordination (e.g. at WTO level), if at all. If it is left to each government, none has an incentive to do it. Possible but unlikely exceptions are very large economies (e.g. the US) whose internal markets are large enough to let them endure trade wars and remove themselves, to some degree, from exposure to global competition, choosing to live by different rules through a range of protectionist measures. Clearly, increasing tax revenue is a very difficult problem.

- Investing in skills – In addition to enabling greater social equality, any increased tax revenue could partly go towards government-sponsored retraining programmes. Up-skilling workers at a greater rate than ever before is vital to ensuring a robust and productive workforce and to helping more people take part in the economies of the future. Policymakers will need to keep abreast of technological change to make sure that such programmes are up to date and can genuinely prepare workers.

While we must be careful not to overstate the pace of development in AI (let’s not worry about a robot takeover just yet), there is certainly potential for these AI-powered machines to radically transform the way we live. There are great opportunities to live better, and there are worst-case scenarios that are far too real not to take seriously and prepare for.

Professor Vasco Castela teaches ethics and philosophy of AI at the British Columbia Institute of Technology. He holds a Master’s in Philosophy of Cognitive Science (Sussex) and a PhD in Ethics (Manchester).

Many of the technological advancements discussed by Professor Castela have the potential to bring both benefits and negative consequences. For example, AI systems coupled with CCTV technology could positively contribute to society in a number of ways, but may also have severe implications for individual privacy.

The ability to intelligently monitor huge volumes of real-time video data would help law enforcement respond faster. A system able to analyse vast numbers of video feeds and alert officials to developing situations would be very valuable in a crisis. AI surveillance would also make it easier to gather evidence for prosecuting criminals (or for any other purpose). Intelligent systems able to analyse and understand the contents of a video (beyond obvious metadata such as timestamps) would make it easier to search for footage featuring a certain person, vehicle, act, location or other characteristic.

However, the potential impact on privacy is clear. Although CCTV is widespread today, only a small fraction of footage can be actively monitored in real time. Were AI to be used, this fraction could increase significantly, and it may become easier to track an individual using both real-time and historical footage. Policymakers are increasingly grappling with regulations that define how technology companies can store and use data on user activity online, where it can be easily tracked. If AI and CCTV combined make it possible to track a person's activity in the real world, it is important that the use of this data is also regulated effectively.

In employment

As has been discussed, predictions on the scale of the effects on employment differ significantly, although most studies show that it is highly likely that many new types of role will be created. These roles will emerge both in new areas and in existing occupations that undergo forms of segmentation and specialisation.

By studying how newly introduced technologies and industrial changes have affected jobs and employment arrangements in the past, it is possible to better prepare businesses and policymakers for the future.

Fairer working arrangements for a digital world

By Professor Tony Dundon

The explosion in new business models enabled by innovative technologies and digital platforms raises questions about the ‘future of work and employment’. These concerns centre not just on how to make activities more efficient, profitable or productive, but also on public policy debates about whether we are witnessing the end of conventional employment patterns for large sections of society and, if so, what alternative arrangements may be available.

While debates and policy preferences differ widely (from those worried about the consequences of widespread work displacement to those who welcome labour being substituted by automation) it is widely recognised that technology in its digitalised form is driving change. This section questions the extent of digital platform modes of employment and discusses how genuinely ‘new’ such arrangements are.

The longevity of work design systems

The key principles for the structure of work underpinning digital platforms are not necessarily new. Many of the organisational features of digitalised work actually mirror some of the structural systems of early capitalism. Key features include:

- Work tasks are performed on demand, similar to zero-hours contracts. Workers often spend time ‘hanging around’ or ‘searching for gigs’, and this unpaid time makes ‘platform work’ precarious, insecure and unpredictable.

- Pay is often casual, based on a piece-rate or payment-by-results system.

- Workers typically have to supply their own equipment (examples are a bike for Deliveroo workers, a car for Uber drivers and a computer for performing online tasks for many worker segments).

- Intermediaries are often used to connect workers with end users. In earlier periods, such intermediaries would have been known as gangmasters, local market recruiters and so on.

These are issues of power, control and coordination, and they carry risks. Such working arrangements can make for more unstable, unpredictable employment regimes, with wider societal spin-offs affecting welfare, security and insecurity, social issues and the economic wellbeing of regions, areas and occupations.

Is technological change inevitable?

Given the pace of its progress and development, it can seem as if technology (and its digital platform additions) is somehow neutral, or that change is inevitably technologically determined. It is not.

The type of technologies used for different tasks, and their subsequent effects on work and employment regimes, are shaped by the decisions of stakeholders who typically occupy positions of power and authority, and who control resource allocation. When employers use cheap labour instead of more expensive (and potentially more productive) technology, it is often because they are choosing lower labour costs over higher infrastructural investment. When it comes to digital work platforms and new technologies (whether Uber, robotics or automation in manufacturing), the primary motives are increasing value, efficiency and profit. Rarely is the social good or societal gain part of the strategic rationale.

Significantly, under digital platform work the intermediary relationships are governed by technology, namely the app or computer interface that supports the new business model. Instead of empowering workers, such intermediary platforms often leave contractual status uncertain: it is unclear who is a worker, an employee, a freelance agent or genuinely self-employed. The net effect is the dilution of legal rights and the exclusion of large and growing sections of the labour force from protections.

This also changes psychological contracts and social exchange relationships; people now interact and communicate via technology rather than in person. One consequence can be a lack of opportunities for social development and for employee voices to be heard.

Technology erodes standard employment arrangements